During the last BruCON edition, I grabbed some statistics about the network usage of our visitors. Every year, I generate stats like the operating system types, the top-used protocols, the number of unique MAC addresses, etc. But this year, we also collected all traffic from the public network. By “public”, I mean the free Wi-Fi offered to visitors during the two days. The result was two big files of ~50 gigabytes each (one per day). This blog post is a post-analysis of those files.

Disclaimer: The collected data (with full payload) was used for research purposes only and was destroyed after the analysis process. Only TCP headers have been archived (as every year) for legal purposes.

Once the data was collected, two issues arose. The first issue was purely technical: how to handle 100+ GB of PCAP data!? Don’t even think about starting Wireshark. The way it handles PCAP files will make it collapse immediately. There are very good forensic tools which can “replay” the sessions from a PCAP but, again, the free versions are not able to handle so much data. Last resort: call the NSA? They know how to handle petabytes of data? The second issue was: what to look for?
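The general principle for captures of this size is to stream the records one at a time instead of loading the whole file into memory. This is not how the analysis in this post was done (Bro did the heavy lifting); it is only a minimal sketch, assuming a classic libpcap file with microsecond timestamps (not pcapng). The function names `iter_pcap_records` and `summarize` are made up for illustration.

```python
import struct
from typing import BinaryIO, Iterator, Tuple

PCAP_GLOBAL_HEADER_LEN = 24  # magic, version, timezone, sigfigs, snaplen, linktype
PCAP_RECORD_HEADER_LEN = 16  # ts_sec, ts_usec, incl_len, orig_len


def iter_pcap_records(stream: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp, captured_bytes) for each record, one at a time."""
    header = stream.read(PCAP_GLOBAL_HEADER_LEN)
    if len(header) < PCAP_GLOBAL_HEADER_LEN:
        raise ValueError("truncated pcap global header")

    # The magic number tells us the byte order used by the capturing host.
    magic = header[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic microsecond pcap file")

    while True:
        rec = stream.read(PCAP_RECORD_HEADER_LEN)
        if len(rec) < PCAP_RECORD_HEADER_LEN:
            return  # clean end of file (or truncated record header)
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(endian + "IIII", rec)
        data = stream.read(incl_len)
        if len(data) < incl_len:
            return  # truncated final record
        yield ts_sec + ts_usec / 1_000_000, data


def summarize(stream: BinaryIO) -> Tuple[int, int]:
    """Return (packet_count, captured_bytes) using constant memory."""
    count = 0
    total = 0
    for _ts, data in iter_pcap_records(stream):
        count += 1
        total += len(data)
    return count, total
```

Because only one record is held in memory at a time, the memory footprint stays flat regardless of the file size; the limiting factor becomes disk or network throughput.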

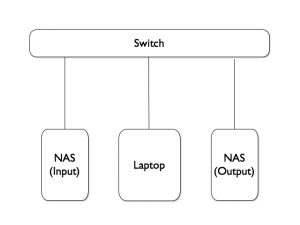

The performance issue was solved with two NAS (1 TB each) and a Linux laptop (4 cores and 8 GB of memory). The first NAS was loaded with the PCAP files and used only for read operations. The second one was the target for all output files (only write operations). All devices were connected to a dedicated switch, as seen in the diagram below:

I decided to parse the PCAP files using multiple tools. The first one is the Bro IDS. It can decode most protocols and create logs that can easily be parsed later. It also has a nice feature: the extraction of files from network flows.
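To give an idea of what “easily parsed” means: Bro's default ASCII logs are tab-separated, with metadata lines starting with `#` and a `#fields` line naming the columns. A minimal sketch of a reader, assuming the default tab separator has not been changed (the function name `iter_bro_log` is hypothetical):

```python
from typing import Dict, Iterable, Iterator, List


def iter_bro_log(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per log entry, keyed by the names on the #fields line."""
    fields: List[str] = []
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith("#fields"):
            # "#fields<TAB>ts<TAB>uid<TAB>..." -> drop the leading marker
            fields = line.split("\t")[1:]
        elif line and not line.startswith("#"):
            # Data line: pair each value with its column name.
            yield dict(zip(fields, line.split("\t")))


# Usage (file name is an example):
#   with open("dns.log") as fh:
#       for entry in iter_bro_log(fh):
#           print(entry["id.resp_h"], entry["query"])
```

All values are kept as strings; converting timestamps or ports is left to the caller.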

Let’s start with a basic, critical and often controversial network service: DNS. Resolvers were provided by the DHCP server. Here are some statistics for the first day only:

- 339K DNS requests sent to 124 unique DNS servers

- The BruCON DNS received 62.83% of all requests

- The Google DNS received less than 1%

- OpenDNS was unused (31 requests)
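Figures like these boil down to counting requests per destination server and dividing by the total. A minimal sketch, assuming the resolver address of each request has already been pulled out of the DNS log (the function name `resolver_shares` is made up for illustration):

```python
from collections import Counter
from typing import Iterable, List, Tuple


def resolver_shares(servers: Iterable[str]) -> List[Tuple[str, int, float]]:
    """Return (server, request_count, percent_of_total), busiest first."""
    counts = Counter(servers)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(srv, n, 100.0 * n / total) for srv, n in counts.most_common()]
```

The number of unique servers is then simply the length of the returned list.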

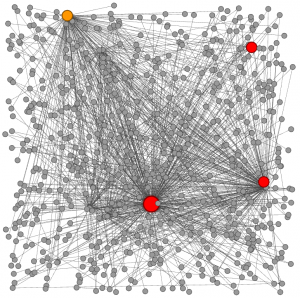

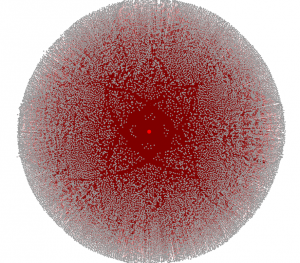

We saw a lot of DNS requests sent to public servers but also to servers in the RFC1918 range. We suppose that some devices were configured with static DNS servers. Here is a map of the DNS traffic for the first day: The big red circle is our official DNS (10.4.0.1), the small red circles are the Google DNS (8.8.8.8 and 8.8.4.4) and the yellow one is ff02::fb, used by multicast DNS (UDP/5353).
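Telling RFC1918 resolvers apart from public ones can be done with Python's standard `ipaddress` module. This is only a sketch; it checks the three RFC1918 blocks explicitly because `is_private` in that module also covers other reserved ranges. The helper name `is_rfc1918` is an assumption.

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(addr: str) -> bool:
    """True if addr is an IPv4 address inside one of the RFC 1918 blocks."""
    ip = ipaddress.ip_address(addr)
    return ip.version == 4 and any(ip in net for net in RFC1918_NETWORKS)
```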

What about SSH? This remote administration tool is well known to infosec people. It can also be used to set up tunnels and transfer files. We detected 380 outgoing SSH sessions. While most people use the standard port (TCP/22), we detected other ports too:

- 443

- 2222

- 11302

- 40000

- 42017

- 44444

- 61222

Which SSH daemons were listening on the other side?

- SSH-2.0-dropbear_2012.55

- SSH-2.0-OpenSSH_4.3

- SSH-2.0-OpenSSH_4.3p2 Debian-9etch3

- SSH-2.0-OpenSSH_4.7p1 Debian-8ubuntu3

- SSH-2.0-OpenSSH_5.1p1 Debian-5

- SSH-2.0-OpenSSH_5.3

- SSH-2.0-OpenSSH_5.3p1 Debian-3ubuntu7

- SSH-2.0-OpenSSH_5.5p1

- SSH-2.0-OpenSSH_5.5p1 Debian-6+squeeze2

- SSH-2.0-OpenSSH_5.5p1 Debian-6+squeeze3

- SSH-2.0-OpenSSH_5.8p1 Debian-1ubuntu3

- SSH-2.0-OpenSSH_5.9p1

- SSH-2.0-OpenSSH_5.9p1 Debian-5ubuntu1

- SSH-2.0-OpenSSH_5.9p1 Debian-5ubuntu1.1

- SSH-2.0-OpenSSH_5.9p1 Debian-5ubuntu1+github5

- SSH-2.0-OpenSSH_5.9p1-hpn13v11lpk

- SSH-2.0-OpenSSH_6.0p1 Debian-2

- SSH-2.0-OpenSSH_6.0p1 Debian-3

- SSH-2.0-OpenSSH_6.0p1 Debian-4

- SSH-2.0-OpenSSH_6.1

- SSH-2.0-OpenSSH_6.1p1 Debian-4

- SSH-2.0-OpenSSH_6.2

- SSH-2.0-OpenSSH_6.3
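Banners like the ones above follow the `SSH-protoversion-softwareversion SP comments` layout from RFC 4253, so they can be split into protocol, product, version and distribution comment. A minimal sketch (the names `SshBanner` and `parse_banner` are hypothetical, and splitting the software string on the first underscore is a convention that happens to fit OpenSSH and dropbear, not something the RFC requires):

```python
import re
from typing import NamedTuple, Optional

BANNER_RE = re.compile(
    r"^SSH-(?P<proto>[^-\s]+)-(?P<software>\S+)(?: (?P<comments>.*))?$"
)


class SshBanner(NamedTuple):
    proto: str
    product: str
    version: str
    comments: Optional[str]


def parse_banner(banner: str) -> Optional[SshBanner]:
    """Split an SSH identification string; return None if it does not match."""
    match = BANNER_RE.match(banner.strip())
    if match is None:
        return None
    # "OpenSSH_5.9p1" -> ("OpenSSH", "5.9p1"); no underscore leaves version empty.
    product, _, version = match.group("software").partition("_")
    return SshBanner(match.group("proto"), product, version, match.group("comments"))
```

Grouping the parsed results by `product` and `version` makes it easier to spot old daemons in a long list.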

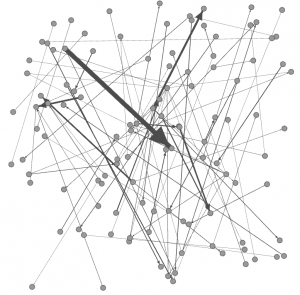

The graph below represents the SSH connections space:

More and more web services are available over SSL by default (Google, Twitter, Facebook). Let’s have a look at the top-20 destinations for SSL connections:

| SSL Connections | Service |
| --- | --- |
| 13730 | google.com |
| 12994 | twitter.com |
| 6255 | t.co |
| 6164 | api.twitter.com |
| 6127 | api.tweetdeck.com |
| 4271 | si0.twimg.com |
| 3242 | fbstatic-a.akamaihd.net |
| 3220 | www.google.com |
| 2986 | cl2.apple.com |
| 2324 | ssl.gstatic.com |
| 2193 | gmail.com |
| 2119 | imap.gmail.com |
| 2114 | gs-loc.apple.com |
| 2074 | itunes.apple.com |
| 2038 | fbcdn-profile-a.akamaihd.net |
| 1950 | api.facebook.com |
| 1926 | courier.push.apple.com |
| 1856 | keyvalueservice.icloud.com |
| 1847 | graph.facebook.com |
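A ranking like this one is a plain frequency count over the server names seen in SSL connections. A minimal sketch, assuming the names have already been extracted from the SSL log; both function names are made up, and `naive_parent_domain` simply keeps the last two labels, which is wrong for suffixes such as `co.uk`:

```python
from collections import Counter
from typing import Iterable, List, Tuple


def top_destinations(server_names: Iterable[str], n: int = 20) -> List[Tuple[str, int]]:
    """Return the n most frequent server names with their connection counts."""
    counts = Counter(name.lower() for name in server_names if name)
    return counts.most_common(n)


def naive_parent_domain(name: str) -> str:
    """Collapse a host name to its last two labels (no public-suffix handling)."""
    labels = name.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])
```

Feeding `naive_parent_domain(name)` into `top_destinations` instead of the raw names merges entries such as `api.twitter.com` and `twitter.com` into a single row.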

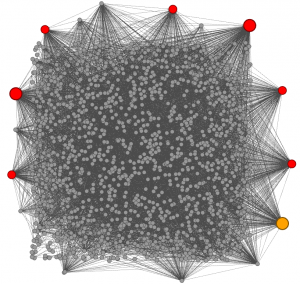

The graph below gives an overview of the SSL traffic from IP addresses to TLD’s:

Now, let’s have a look at the files downloaded by attendees. Bro has a very nice feature: it can extract files from network flows. To achieve this, just run a standard Bro instance with the following extract.bro script passed as an argument:

    # Map MIME types to file extensions; unknown types get an empty extension.
    global ext_map: table[string] of string = {
        ["application/x-dosexec"] = "exe",
        ["application/pdf"] = "pdf",
        ["application/pgp-keys"] = "key",
        ["application/msword"] = "doc",
        ["application/msaccess"] = "mdb",
        ["application/vnd.openxmlformats-officedocument.spreadsheetml.sheet"] = "xlsx",
        ["application/vnd.openxmlformats-officedocument.wordprocessingml.document"] = "docx",
        ["application/vnd.ms-powerpoint"] = "ppt",
        ["application/vnd.ms-excel"] = "xls",
        ["application/java-archive"] = "jar",
        ["application/rar"] = "rar",
        ["application/zip"] = "zip",
        ["application/xml"] = "xml",
        ["application/x-gtar"] = "tar",
        ["application/x-gtar-compressed"] = "tgz",
        ["text/plain"] = "txt",
        ["image/jpeg"] = "jpg",
        ["image/png"] = "png",
        ["image/x-icon"] = "ico",
        ["image/gif"] = "gif",
        ["text/html"] = "html",
        ["text/x-src"] = "c",
    } &default="";

    # Called for each new file seen in the traffic: attach the extraction analyzer.
    event file_new(f: fa_file)
        {
        local ext = "";

        if ( f?$mime_type )
            ext = ext_map[f$mime_type];

        # File name: <source>-<file id>.<extension>
        local fname = fmt("%s-%s.%s", f$source, f$id, ext);
        Files::add_analyzer(f, Files::ANALYZER_EXTRACT, [$extract_filename=fname]);
        }

This script will save files with the right extension based on the MIME type. Results:

- 362252 files have been extracted

- For a total of 30 GB

To clean up the mess, I removed the HTML files and files < 512 bytes. I reviewed some files but discovered nothing fancy. Not even porn! 😉 Searching for interesting data or “smoke signals” in a huge amount of data is not easy. What to search for? Which keywords? It’s like looking for a needle in a haystack! Here is a nice picture to finish: All external hosts used during the conference!
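The cleanup step mentioned above (dropping HTML files and anything smaller than 512 bytes) could be sketched as follows. This is an illustration, not the commands actually used; the function name and the directory name are assumptions, and the function only lists candidates so that they can be reviewed before anything is deleted.

```python
from pathlib import Path
from typing import List

MIN_SIZE = 512  # bytes; smaller files are considered noise


def select_for_removal(directory: str, min_size: int = MIN_SIZE) -> List[Path]:
    """List extracted files that are HTML or smaller than min_size bytes."""
    doomed = []
    for path in sorted(Path(directory).iterdir()):
        if not path.is_file():
            continue
        if path.suffix.lower() == ".html" or path.stat().st_size < min_size:
            doomed.append(path)
    return doomed


# Usage (directory name is an example):
#   for path in select_for_removal("extract_files"):
#       path.unlink()
```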

Sometimes but in this case, graphs were generated by Gephi!

What visualization tool are you using here? Graphviz?

Hi Phil,

Not easy to say, it took me 48 hours to have the expected results but some tools were running a few hours, some bad tries etc…

The question is not “how to search” (once you have PCAP data, it’s a win) but “what to search”! You can search for known MD5, passwords from a dict file etc… Just use your imagination!

In fact, all the DNS queries I saw had this bit set to 1!

RT @xme: [/dev/random] What Do Attendees During a Security Conference? http://t.co/YPsxBevh8e

How long did the analysis take with that set-up?

What was the limiting part?

What other questions were you not able to answer with those tools?

How many DNS queries with the DO bit set?

@xme haha i’m glad you found nothing

@xme : Does not require auth by the CPVP ?
