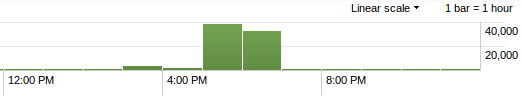

Let me share this story with you. I faced a strange incident last Saturday: my web server was flooded with thousands of HTTP GET requests generated by WordPress blogs. Those connections seemed legitimate. The “attack” (let’s call it that for now, even if I don’t think it was one) occurred on Saturday afternoon between 17:00 and 18:00 (GMT+1). A first bunch of requests hit the server starting at 15:54, and the real flood occurred one hour later, as you can see on the timeline below.


The biggest peak was around 325 connections/second: enough to put my server in trouble, but not enough to conduct a real attack. That’s why I suspect a misconfiguration. Another clue helped me categorize the incident: it was very (too?) easy to block, as the traffic could be caught with a simple pattern. How did I detect the problem? I was alerted by the tools I have in place:

- High CPU usage and low free memory on the web server (health monitoring)

- Unusual HTTP traffic (log management)

- Amount of traffic originating from the same IP addresses

- Number of requests/sec (behavior)
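The requests/sec metric can also be recomputed after the fact from the access log, which is a handy way to confirm a peak like the ~325 connections/second mentioned above. Below is a minimal Python sketch, assuming Apache's default `%t` timestamp format (`[09/Mar/2013:15:54:20 +0100]`); the function names and the 300 req/s threshold are hypothetical, not the author's monitoring setup.

```python
import re
from collections import Counter
from datetime import datetime

# Apache's %t field, e.g. [09/Mar/2013:15:54:20 +0100]
TIMESTAMP_RE = re.compile(r"\[([^\]]+)\]")
TIMESTAMP_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def requests_per_second(lines):
    """Count access-log lines per one-second bucket."""
    counts = Counter()
    for line in lines:
        match = TIMESTAMP_RE.search(line)
        if match:
            counts[datetime.strptime(match.group(1), TIMESTAMP_FORMAT)] += 1
    return counts


def flag_bursts(counts, threshold=300):
    """Return the seconds whose request count reaches the (hypothetical) threshold."""
    return sorted(second for second, n in counts.items() if n >= threshold)
```

Passing an open log file to `requests_per_second()` and taking `max(counts.values())` gives the peak rate for the period covered by the file.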

The requests received were very simple and targeted only one of the websites hosted on the box (www.leakedin.com):

41.203.18.72:36261 - - [09/Mar/2013:15:54:20 +0100] "GET / HTTP/1.0" 200 33393 "-" "WordPress/3.5; http://www.finserv.co.za"
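To analyze such entries programmatically, each line can be split into fields. The sample line starts with `ip:port`, so the server apparently uses a custom `LogFormat` that is not shown here; the regular expression below is a sketch fitted to that sample only. It also splits the WordPress User-Agent, which follows the `WordPress/<version>; <blog_url>` pattern, into a version and a blog URL. Function and field names are hypothetical.

```python
import re

# Fitted to the sample line: ip:port - - [time] "request" status size "referer" "UA"
LOG_RE = re.compile(
    r'^(?P<ip>[\d.]+):(?P<port>\d+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) "(?P<referer>[^"]*)" "(?P<ua>[^"]*)"$'
)
# "WordPress/<version>; <blog_url>"; the version part is optional
WP_UA_RE = re.compile(r"^WordPress(?:/(?P<version>[\d.]+))?; (?P<blog>\S+)$")


def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LOG_RE.match(line.rstrip("\n"))
    if not match:
        return None
    entry = match.groupdict()
    wp = WP_UA_RE.match(entry["ua"])
    entry["wp_version"] = wp.group("version") if wp else None
    entry["blog"] = wp.group("blog") if wp else None
    return entry
```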

Nothing suspicious in the payloads: even mod_security did not fire any alert during the flood! I also had time to capture some traffic into pcap files; nothing was wrong except the number of requests. Once the problem was identified, my first priority was to return to a stable environment (containment). My first idea was to block all “bad” requests based on the User-Agent. The User-Agent strings were those used by WordPress: `WordPress/<version>; <blog_url>`. This simple Apache configuration did the job:

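```apache
# Tag any request whose User-Agent contains "WordPress" (case-insensitive)
SetEnvIfNoCase User-Agent WordPress block

<Directory "/xxxx/xxxx/xxxx">
    # Apache 2.2 access control: allow everyone except tagged requests
    Order allow,deny
    Allow from all
    Deny from env=block
</Directory>
```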
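`SetEnvIfNoCase` applies its regular expression case-insensitively and without anchoring, so the rule above tags any User-Agent that contains "WordPress" anywhere, not only strings that start with it. Here is a rough Python model of that decision and the resulting status code; the helper names are hypothetical, and this models only the match logic, not how Apache actually processes the request.

```python
import re

# Mirrors: SetEnvIfNoCase User-Agent WordPress block
# (case-insensitive, unanchored match anywhere in the header)
BLOCK_RE = re.compile(r"WordPress", re.IGNORECASE)


def is_blocked(user_agent):
    """True if the request would receive the "block" environment variable."""
    return BLOCK_RE.search(user_agent) is not None


def response_status(user_agent):
    """Order allow,deny + Deny from env=block: tagged requests get 403, others 200."""
    return 403 if is_blocked(user_agent) else 200
```

Anchoring the pattern (for example `^WordPress/`) would narrow the match to the core User-Agent format; whether that is preferable depends on which other clients legitimately visit the site.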

This User-Agent filter worked for a few minutes, but it only prevented the remote hosts from grabbing data from the server: all requests were still processed and answered with a 403 error instead of a 200. The second idea was to limit the number of concurrent connections allowed for www.leakedin.com. This was implemented via mod_bandwidth:

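```apache
<Directory "/xxxx/xxxx/xxxx">
    # Enable bandwidth/connection control for this directory
    BandWidthModule on
    # Cap simultaneous connections at 10 ("all" covers every client)
    MaxConnection all 10
</Directory>
```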
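Unlike the 403 rule, a connection cap bounds how many requests for this site are handled at the same time; anything above the limit is refused immediately rather than served. The Python sketch below models that idea with a non-blocking semaphore. The class and function names are hypothetical, and this is only a conceptual model, not how mod_bandwidth is implemented.

```python
import threading


class ConnectionLimiter:
    """Admit at most max_connections concurrent requests; refuse the rest at once.

    Conceptual model of a cap such as "MaxConnection all 10".
    """

    def __init__(self, max_connections=10):
        self._slots = threading.BoundedSemaphore(max_connections)

    def try_acquire(self):
        """Return True if a slot was free, False if the cap is reached (never blocks)."""
        return self._slots.acquire(blocking=False)

    def release(self):
        """Free a slot when the request completes."""
        self._slots.release()


def handle(limiter, serve):
    """Run serve() inside a slot, or report that the request was refused."""
    if not limiter.try_acquire():
        return "refused"
    try:
        return serve()
    finally:
        limiter.release()
```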

This time, limiting concurrent connections was successful and the server returned to a stable (manageable) state. Time for investigations! I extracted useful data from my log files and did some research. First, some stats:

- 761,395 GET requests

- Coming from 624 unique IP addresses

- Coming from 562 different blog addresses (grabbed from UA strings)

- Coming from 28 different WordPress versions (non-obfuscated)

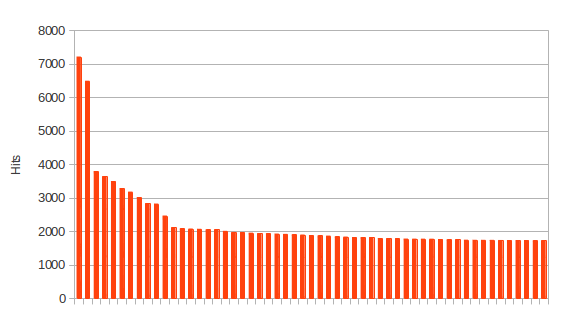
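Figures like these are straightforward to derive once each request is reduced to its source IP and User-Agent. Here is a sketch in Python, assuming the `(ip, user_agent)` pairs have already been extracted from the log. A version counts as "non-obfuscated" here when the UA carries a numeric `WordPress/x.y.z` part, which is an assumption about how the figure was obtained; the function name is hypothetical.

```python
import re

# "WordPress/<version>; <blog_url>"; the version part is optional
WP_UA_RE = re.compile(r"^WordPress(?:/(?P<version>[\d.]+))?; (?P<blog>\S+)$")


def summarize(requests):
    """requests: iterable of (ip, user_agent) pairs for the GET requests."""
    total = 0
    ips, blogs, versions = set(), set(), set()
    for ip, user_agent in requests:
        total += 1
        ips.add(ip)
        match = WP_UA_RE.match(user_agent)
        if match:
            blogs.add(match.group("blog"))
            if match.group("version"):  # only visible (non-obfuscated) versions
                versions.add(match.group("version"))
    return {
        "requests": total,
        "unique_ips": len(ips),
        "unique_blogs": len(blogs),
        "wordpress_versions": len(versions),
    }
```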

The number of hits per IP address was stable, as seen in the chart below. The first IP addresses in the chart hosted more than one blog (shared platforms).

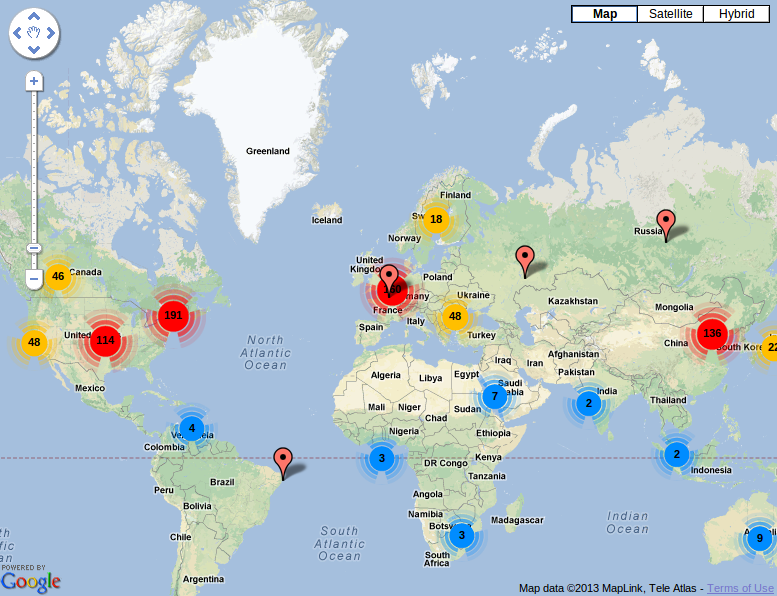
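Both observations, hits per IP and IP addresses sending requests on behalf of several blogs, come from grouping the same data by source address. Here is a minimal Python sketch, assuming `(ip, blog_url)` pairs already extracted from the log; the function names are hypothetical.

```python
from collections import Counter, defaultdict


def hits_and_blogs_per_ip(requests):
    """requests: iterable of (ip, blog_url). Returns (hits per IP, blogs per IP)."""
    hits = Counter()
    blogs = defaultdict(set)
    for ip, blog in requests:
        hits[ip] += 1
        blogs[ip].add(blog)
    return hits, blogs


def shared_hosts(blogs_per_ip):
    """IPs that sent requests for more than one blog (e.g. shared hosting)."""
    return {ip: sorted(b) for ip, b in blogs_per_ip.items() if len(b) > 1}
```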

Where did those websites come from?

The logged IP addresses were indeed those of the blogs mentioned in the UA strings (not fake). What about the blogs themselves? They were not compromised (I tested some of them using urlquery.net) and are still alive. Their content does not help me understand the issue: different languages, multiple topics, and most of them are not related to IT or close to leakedin.com. I searched them for “leakedin.com”: no hits returned!
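The post doesn't say how the IP addresses were matched to the blogs. One possible approach is a forward DNS lookup: resolve the hostname from the blog URL in the UA and check whether the source IP is among the returned addresses. Below is a Python sketch under that assumption (IPv4 only, via `socket.gethostbyname_ex`); the function name and the injectable `resolve` parameter are hypothetical, the latter only there to allow offline testing. DNS answers today may differ from those at the time of the incident, and a blog whose outgoing traffic leaves from another address would fail this check even with a genuine UA.

```python
import socket
from urllib.parse import urlsplit


def ua_matches_source(source_ip, blog_url, resolve=socket.gethostbyname_ex):
    """True if the hostname in blog_url resolves to source_ip (IPv4 only)."""
    host = urlsplit(blog_url).hostname
    if not host:
        return False
    try:
        # gethostbyname_ex returns (hostname, aliases, ip_addresses)
        _, _, addresses = resolve(host)
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
    return source_ip in addresses
```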

Having multiple versions of WordPress (from very old to the latest one) tends to prove that it’s not an exploit. Some blogs that I visited had not been updated since 2011! What was the origin of this problem? I don’t have a clue. If you have more information or ideas to share, feel free to post comments!

A final remark: The number of outdated WordPress versions is impressive! The oldest one detected was 2.8.3!
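Spotting the oldest version among the UA strings requires a numeric comparison: compared as plain strings, "10.0" would sort before "9.0". Here is a small Python sketch (hypothetical helper names) that splits each version into integers first.

```python
def version_key(version):
    """Turn '2.8.3' into (2, 8, 3) so that versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def oldest_version(versions):
    """Return the lowest version string, e.g. among those found in the UA strings."""
    return min(versions, key=version_key)
```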

RT @xme: [/dev/random] WordPress GET Requests Flood? http://t.co/CXwRy5Oi3I
