SEAT (Search Engine Assessment Tool) is a tool aimed at security professionals and pentesters. It uses popular search engines to look for interesting information stored in their caches. It also uses other types of public resources (see later). Popular search engines like Google or Yahoo! (non-exhaustive list) use crawlers (or robots) to surf the Internet, visit the websites they find, index the retrieved content and store it in databases [Note: A small tool to check when the Google bot last visited your site: gbotvisit.com].

What’s the security concern? Web robots index everything they can reach (in reality, some filters can be defined via robots.txt files, but that is outside the scope of this post). Let’s assume that everything is cached. This means that unexpected content can be crawled by robots and made publicly available:

- temporary pages

- unprotected confidential material

- sites under construction

When you search for something via Google, you just type a few words and expect some useful content to be returned. But search engines can process much more complex queries! Examples (for Google):

- “site:rootshell.be foo” will search for the string “foo” only on hosts matching *.rootshell.be.

- “inurl:password” will search for the string “password” in the URL only.

- “ext:pdf exploit” will search for the string “exploit” in PDF documents.

If you’re interested in such queries, have a look here. Using complex queries to extract sensitive information from Google is called “Google Hacking”.
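To make the operators above more concrete, here is a minimal Python sketch that assembles such a query string and the matching search URL. The helper names (`build_query`, `search_url`) are made up for this post and have nothing to do with SEAT's own code; the only assumptions are the `site:`, `inurl:` and `ext:` operators described above and the usual `q` parameter of the Google search page.

```python
from urllib.parse import urlencode


def build_query(terms="", site=None, inurl=None, ext=None):
    """Combine free-text terms with optional search operators (hypothetical helper)."""
    parts = []
    if site:
        parts.append(f"site:{site}")    # restrict results to one host or domain
    if inurl:
        parts.append(f"inurl:{inurl}")  # the string must appear in the URL
    if ext:
        parts.append(f"ext:{ext}")      # restrict results to one file extension
    if terms:
        parts.append(terms)
    return " ".join(parts)


def search_url(query, base="https://www.google.com/search"):
    """URL-encode the query and append it to the search page address."""
    return f"{base}?{urlencode({'q': query})}"


if __name__ == "__main__":
    for q in (
        build_query("foo", site="rootshell.be"),
        build_query(inurl="password"),
        build_query("exploit", ext="pdf"),
    ):
        print(q, "->", search_url(q))
```

The sketch only builds strings; it sends nothing to the search engine.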

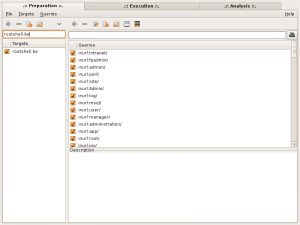

This is where the power of SEAT comes in! It builds complex queries not only against Google but also against other well-known search engines. It comes with a pre-installed list of the most common ones, but you’re free to add your own. The pre-configured search engines are: Google, Yahoo, Live, AOL, AllTheWeb, AltaVista and DMOZ. Once the queries have been performed (this may take quite some time if you configured multiple searches), it displays the results in a convenient way. Have a look at the GUI:


Using SEAT is a three-phase process (the three tabs at the top of the window):

- Preparation: Here you define your target (a host, a domain name or IP addresses) and which type(s) of query you will perform.

- Execution: Here you select the search engine(s) you would like to use and how to query them (number of threads, sleep times, …). Then you start/pause/stop the queries. Queries are multi-threaded, which may have a side effect: your IP address can be blacklisted (Google has a powerful algorithm to prevent the use of tools like SEAT). Take care if you use it from your corporate LAN: your whole company could be temporarily blacklisted by Google!

- Analysis: The last step is the analysis of the retrieved content.
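The two knobs of the Execution phase (number of threads and sleep time) can be illustrated with a small Python sketch. This is not how SEAT is implemented (SEAT is written in Perl); `run_queries` and `fake_fetch` are hypothetical names, and `fake_fetch` is a stand-in that never touches the network.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_queries(queries, fetch, threads=2, sleep_time=1.0):
    """Run fetch(query) for every query on a small thread pool.

    Each worker sleeps after its request, so fewer threads and a longer
    sleep_time both lower the request rate seen by the search engine.
    """
    def worker(query):
        result = fetch(query)
        time.sleep(sleep_time)  # pause before this thread picks up the next query
        return query, result

    with ThreadPoolExecutor(max_workers=threads) as pool:
        return dict(pool.map(worker, queries))


def fake_fetch(query):
    """Stand-in for a real search request (assumption: no network access)."""
    return f"results for {query}"


if __name__ == "__main__":
    queries = ["site:rootshell.be foo", "inurl:password"]
    print(run_queries(queries, fake_fetch, threads=2, sleep_time=0.5))
```

Keeping the thread count low and the sleep time generous is the simplest way to reduce the risk of the blacklisting mentioned above.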

Once the analysis is done (and it can take quite some time depending on your targets/queries), the results are available. For each result, extra operations can be performed (by double-clicking the URL):

- Direct request (Warning: this can reveal your IP address to the target)

- Grab data from the Netcraft database

- Grab a copy from archive.org

- Grab a copy from the Google cache
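As an illustration of the archive.org option, here is a short Python sketch that builds the addresses one would use to look up an archived copy of a result. It relies on the public Wayback Machine URL scheme and its availability endpoint, not on anything taken from SEAT; the function names are hypothetical and the sketch only builds strings.

```python
from urllib.parse import urlencode


def wayback_url(url, timestamp=""):
    """Address of an archived copy; '*' lists all captures of the URL."""
    return f"https://web.archive.org/web/{timestamp or '*'}/{url}"


def availability_url(url, timestamp=""):
    """Address of the availability endpoint, which reports the closest capture."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp  # format: YYYYMMDD (or longer)
    return f"https://archive.org/wayback/available?{urlencode(params)}"


if __name__ == "__main__":
    print(wayback_url("http://example.com/", "2008"))
    print(availability_url("example.com", "20080101"))
```

Unlike a direct request, fetching these addresses contacts archive.org rather than the target itself.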

SEAT is fully customizable: your own search engines and advanced queries can be added. Execution can be tuned (number of concurrent threads, User-Agent, sleep time between queries, etc.) and, of course, results can be saved (export to .txt or .html files).
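For readers who want to post-process results themselves, a rough Python sketch of a .txt and .html export could look like the following. The output layout is my own assumption and not SEAT's actual export format; `to_text` and `to_html` are hypothetical helpers.

```python
from html import escape


def to_text(urls):
    """One URL per line."""
    return "\n".join(urls) + "\n"


def to_html(urls, title="Results"):
    """Minimal HTML page with one clickable link per URL (escaped for safety)."""
    items = "\n".join(
        f'  <li><a href="{escape(u, quote=True)}">{escape(u)}</a></li>' for u in urls
    )
    return (
        f"<html><head><title>{escape(title)}</title></head><body>\n"
        f"<ul>\n{items}\n</ul>\n</body></html>\n"
    )


if __name__ == "__main__":
    found = ["http://example.com/admin/", "http://example.com/?a=1&b=2"]
    with open("results.txt", "w", encoding="utf-8") as fh:
        fh.write(to_text(found))
    with open("results.html", "w", encoding="utf-8") as fh:
        fh.write(to_html(found))
```

Escaping the URLs matters here because search results can contain characters such as `&` or `<` that would otherwise break the generated page.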

Search engine databases are full of interesting information! As was repeated during the last ISSA meeting this week, if you are looking for information about a target, just ask! SEAT is a perfect tool for conducting an audit or pentest.

A few words about the supported environment: SEAT is written in Perl (version 5.8.0-RC3 and higher) and requires the following modules: Gtk2, threads, threads::shared, XML::Smart. Check out the official website: midnightresearch.com.
