My second training week in London is done. This was a bootcamp organized by a well-known company active in log management solutions. Of course, the training focuses mainly on their own products, but some of the principles reviewed are totally independent of any software or hardware solution and can be applied to any infrastructure.

Logs are critical in your IT infrastructure. They help you to keep your devices and applications under control. That’s why they need to be managed in the right way. All devices generate events to report their health status or problems. The amount of events generated every day may quickly become unmanageable with inappropriate procedures and tools. That’s why we need to follow the event lifecycle.

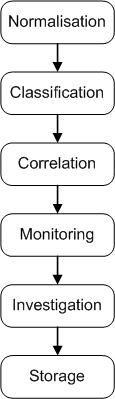

Following the event lifecycle improves the accuracy of the data, the performance and the security. It is based on six steps:

- Normalization

- Classification

- Correlation

- Monitoring

- Investigation & reporting

- Storage

Normalization

The normalization of events received from the devices must occur as early as possible in the event lifecycle. The protocols used to transport events are often based on UDP (Syslog or SNMP) for performance reasons. Even if SNMP is more secure in its version 3, Syslog remains a clear text protocol. A second problem: messages generated by the devices contain the same information but formatted in different ways (IP addresses, logins, processes, …). Check out a previous post about this issue.

The normalization occurs on a “collector”, which will pre-process the events, extract the relevant information and reformat it into a common format. Then, the events will be forwarded to a central place using a stronger protocol which provides encryption and reliability. To prevent loss of data, the collector will be placed as close as possible to the devices. It will also have enough local storage and use a queuing mechanism to keep events locally in case of a network outage or a failure of the next step in the process, managed by the “logger”. Finally, the amount of events can be reduced using filters: only relevant information will be forwarded to the “logger” to reduce the bandwidth and storage requirements.
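To make the idea of a common format more concrete, here is a minimal sketch of what a collector could do. The two raw message formats, the field names and the `normalize` function are assumptions made up for this illustration; they do not come from any specific product.

```python
import re
from datetime import datetime, timezone

# Hypothetical raw formats from two device types (illustrative only).
SSH_PATTERN = re.compile(
    r"Failed password for (?P<user>\S+) from (?P<src_ip>\d+\.\d+\.\d+\.\d+)"
)
FIREWALL_PATTERN = re.compile(
    r"action=(?P<action>\w+) src=(?P<src_ip>\d+\.\d+\.\d+\.\d+) user=(?P<user>\S+)"
)


def normalize(raw_line, device, received_at):
    """Return the event in a common schema, or None if the line is not recognized."""
    match = SSH_PATTERN.search(raw_line)
    if match:
        event_type = "access_denied"
    else:
        match = FIREWALL_PATTERN.search(raw_line)
        if match is None:
            return None  # unknown format: the caller decides to drop or keep it raw
        denied = match.group("action") == "deny"
        event_type = "access_denied" if denied else "access_granted"
    return {
        "timestamp": received_at.isoformat(),
        "device": device,
        "event_type": event_type,
        "user": match.group("user"),
        "src_ip": match.group("src_ip"),
    }


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(normalize("sshd[123]: Failed password for alice from 10.0.0.5 port 22", "srv1", now))
print(normalize("fw: action=deny src=10.0.0.5 user=alice", "fw1", now))
```

Both lines end up with the same `event_type`, `user` and `src_ip` fields, which is what makes the later steps (classification, correlation) possible regardless of the device that produced the message.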

Classification

Once the events are normalized, the next step is to classify them based on the information they provide and information coming from third parties. Example: depending on the source IP address, a criticality level will be added to the event. Events generated by devices in the DMZ will be more critical than those from the internal network. An event received from a public web server will have a higher priority than one from a private server. If the event source is an IP address and a vulnerability scanner found vulnerabilities for this IP, the priority will also be raised.

To classify events, assets (devices and applications) must be managed. Classification may occur in several ways: per location, per application, per public availability and/or per operating system.
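The classification rules described above could be sketched as follows. The zone table, the base priorities, the list of vulnerable IP addresses and the `source_ip` field name are all hypothetical; in practice they would come from an asset inventory and from the vulnerability scanner.

```python
import ipaddress

# Hypothetical asset inventory: (network, zone name, base priority).
# A higher number means a more critical event.
ZONES = [
    (ipaddress.ip_network("192.0.2.0/24"), "dmz", 3),
    (ipaddress.ip_network("10.0.0.0/8"), "internal", 1),
]

# Hypothetical result of a vulnerability scan.
VULNERABLE_IPS = {"192.0.2.10"}


def classify(event):
    """Return a copy of the event enriched with 'zone' and 'priority' fields."""
    ip = ipaddress.ip_address(event["source_ip"])
    zone, priority = "unknown", 2  # assumed default for assets not in the inventory
    for network, name, base_priority in ZONES:
        if ip in network:
            zone, priority = name, base_priority
            break
    if event["source_ip"] in VULNERABLE_IPS:
        priority += 1  # known vulnerabilities raise the priority
    return {**event, "zone": zone, "priority": priority}


print(classify({"source_ip": "192.0.2.10", "event_type": "access_denied"}))
print(classify({"source_ip": "10.1.2.3", "event_type": "access_denied"}))
```

The same event type gets a different priority depending on where it comes from, which is why the asset inventory has to be kept up to date.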

At this step, events can just be stored for later processing (search or reporting). But other operations can still increase their value…

Correlation

I already wrote about this topic. The correlation of events helps the administrators to detect suspicious activities happening in their infrastructure. Event correlation is defined by Wikipedia as “a technique for making sense of a large number of events and pinpointing the few events that are really important in that mass of information”.

Example: if access is denied for a user 10 times in 3 minutes, the server may be under a brute-force attack. Or, an employee who used his access badge to enter building “C” and who logged in a few minutes later on a workstation in building “B” may indicate that his credentials were stolen or shared.

The correlation process creates new events which are also stored in the repository. Note that correlation can only work if the collection and normalization of events were correctly deployed and tested.
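The brute-force example (10 denied accesses in 3 minutes) can be expressed as a sliding time window per user. This is only a sketch: the class name, the shape of the generated event and the assumption that events arrive in chronological order are mine, not a description of how a given product works.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

THRESHOLD = 10                 # number of denied accesses...
WINDOW = timedelta(minutes=3)  # ...within this period


class BruteForceCorrelator:
    """Sliding-window counter per user; assumes events arrive in time order."""

    def __init__(self):
        self._recent = defaultdict(deque)

    def feed(self, user, timestamp):
        """Record one denied access; return a new correlated event or None."""
        window = self._recent[user]
        window.append(timestamp)
        # Forget the denied accesses that are older than the window.
        while timestamp - window[0] > WINDOW:
            window.popleft()
        if len(window) >= THRESHOLD:
            count = len(window)
            window.clear()  # avoid raising one alert per additional failure
            return {
                "event_type": "possible_brute_force",
                "user": user,
                "count": count,
                "timestamp": timestamp.isoformat(),
            }
        return None


correlator = BruteForceCorrelator()
start = datetime(2024, 1, 1, 12, 0)
for i in range(10):
    alert = correlator.feed("alice", start + timedelta(seconds=10 * i))
print(alert)
```

The returned dictionary is the "new event" created by the correlation: it can be stored and monitored like any other event.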

Monitoring

All collected events must be stored because they all have a value. Some simple or correlated events need immediate attention. That’s why monitoring is important. When such an event is detected, an action is triggered (script, e-mail, on-screen message, …).
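As a rough illustration, monitoring can be seen as a rule that decides whether an event needs attention, plus an action to run when it does. The `priority` field, the threshold and the `make_monitor` helper are assumptions for this sketch; here the action simply appends to a list, where a real setup would run a script or send an e-mail.

```python
def make_monitor(min_priority, action):
    """Build a monitor that calls `action` for events at or above `min_priority`."""

    def monitor(event):
        if event.get("priority", 0) >= min_priority:
            action(event)
            return True
        return False

    return monitor


alerts = []  # stands in for a script, an e-mail or an on-screen message
monitor = make_monitor(3, alerts.append)

monitor({"event_type": "possible_brute_force", "priority": 4})
monitor({"event_type": "access_granted", "priority": 1})
print(alerts)
```

Only the first event triggers the action; the second one is simply stored for later use.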

Investigation & reporting

While some events need immediate attention (cf. the paragraph above), the others still have a value which can be revealed later during investigations. When performing a forensic search, we often do not know in advance what we are looking for. Those investigations start from a piece of information such as an IP address or a username.

Reporting will reuse the events collected during a given period of time or for a specific asset and summarize the activity. Reporting can be used to detect trends or to present the events in more readable forms, like charts, for less technical people.
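A report of this kind boils down to filtering the stored events by period and asset, then counting. The sketch below assumes events are dictionaries with `timestamp` (a `datetime` object), `device` and `event_type` fields; these names are illustrative.

```python
from collections import Counter
from datetime import datetime


def summarize(events, start, end, device=None):
    """Count events per type within [start, end), optionally for one device."""
    counts = Counter()
    for event in events:
        if not (start <= event["timestamp"] < end):
            continue
        if device is not None and event["device"] != device:
            continue
        counts[event["event_type"]] += 1
    return counts


events = [
    {"timestamp": datetime(2024, 1, 1, 9), "device": "fw1", "event_type": "access_denied"},
    {"timestamp": datetime(2024, 1, 1, 10), "device": "fw1", "event_type": "access_denied"},
    {"timestamp": datetime(2024, 1, 1, 11), "device": "srv1", "event_type": "access_granted"},
    {"timestamp": datetime(2024, 1, 2, 9), "device": "fw1", "event_type": "access_denied"},
]

day = (datetime(2024, 1, 1), datetime(2024, 1, 2))
print(summarize(events, *day))
print(summarize(events, *day, device="fw1"))
```

The resulting counters are the raw material for a trend graph or a summary table.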

Storage

Last but not least, good storage is also important. Some business requirements ask for very long retention periods for logs (sometimes several years), so a good long-term storage solution is mandatory. In this case, we won’t focus on performance but on reliability and ease of extension or migration. A SAN can be a good solution.

Event management is covered by all the compliance programs (PCI, SOX, …) but don’t wait to be forced by your business requirements to deploy a log management solution! In most cases, collecting events and processing them does not require a complete redesign of your IT infrastructure and can occur in parallel with other projects.