Sometimes during forensic investigations, it can be useful to recover deleted or temporary files transferred by users and/or processes with protocols like FTP or HTTP. Let’s see how to achieve this using pcap files!

libpcap is an API that provides packet capture facilities. It is very common on Unix, and there is also a version for Windows environments (logically called WinPcap). Captured traffic can be saved to a “pcap file” and later processed by another tool using the same API. Example: packets are captured on a Debian system and analyzed on a Windows desktop with Wireshark.
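To make the “pcap file” idea concrete, here is a minimal Python sketch of a reader for the classic libpcap file format: a 24-byte global header (magic number, version, snaplen, link type), then, for each packet, a 16-byte record header followed by the captured bytes. The names `read_pcap` and `PcapRecord` are hypothetical, and the newer pcapng format (the default output of recent Wireshark versions) is deliberately not handled.

```python
import struct
from typing import BinaryIO, Iterator, NamedTuple, Tuple


class PcapRecord(NamedTuple):
    ts_sec: int       # capture timestamp, seconds
    ts_frac: int      # microseconds (nanoseconds for the 0xa1b23c4d magic)
    orig_len: int     # packet length on the wire
    data: bytes       # bytes actually captured (at most snaplen)


def read_pcap(f: BinaryIO) -> Tuple[int, int, Iterator[PcapRecord]]:
    """Parse a classic libpcap file and return (snaplen, linktype, records)."""
    header = f.read(24)
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    magic = struct.unpack("<I", header[:4])[0]
    if magic in (0xA1B2C3D4, 0xA1B23C4D):
        endian = "<"                     # file written in little-endian order
    elif magic in (0xD4C3B2A1, 0x4D3CB2A1):
        endian = ">"                     # file written in big-endian order
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    # magic, version major/minor, thiszone, sigfigs, snaplen, linktype
    _, _, _, _, _, snaplen, linktype = struct.unpack(endian + "IHHiIII", header)

    def records() -> Iterator[PcapRecord]:
        while True:
            rec_header = f.read(16)
            if len(rec_header) < 16:
                return                   # end of file
            ts_sec, ts_frac, incl_len, orig_len = struct.unpack(
                endian + "IIII", rec_header)
            yield PcapRecord(ts_sec, ts_frac, orig_len, f.read(incl_len))

    return snaplen, linktype, records()
```

For example, `snaplen, linktype, packets = read_pcap(open("/tmp/eth0.pcap", "rb"))`; a link type of 1 means Ethernet.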

Captured traffic does not only contain basic information like source IP, source port, destination IP and destination port: protocols above layer 3 are logged as well, up to the application layer. So how can we reconstruct downloaded data from a pcap file?

To successfully reconstruct data, we must make sure that complete packets are logged. As an example, older versions of tcpdump capture only the first 68 bytes of each packet by default. That is enough for the usual TCP and UDP header information, but application data will be truncated. To avoid this problem, use the “-s” (“snaplen”) option to specify the maximum number of bytes to capture per packet. Tip: set this value to “0” to capture whole packets. The following command will log all HTTP traffic (port 80) exchanged with www.myserver.com to a file:

```
# tcpdump -i eth0 -s 0 -w /tmp/eth0.pcap port 80 and \
    host www.myserver.com
```
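Whether a capture was truncated can be checked from the file itself: the global header stores the snaplen, and every record stores both the number of bytes captured and the original length on the wire. The sketch below (a hypothetical helper, `truncation_report`, limited to classic pcap files) counts the packets whose captured length is shorter than their original length; any such packet has lost payload that cannot be recovered later.

```python
import struct
from typing import BinaryIO, Tuple


def truncation_report(f: BinaryIO) -> Tuple[int, int, int]:
    """Return (snaplen, total_records, truncated_records) for a classic pcap file."""
    header = f.read(24)
    magic = header[:4]
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):
        endian = "<"                      # little-endian file
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):
        endian = ">"                      # big-endian file
    else:
        raise ValueError("not a classic pcap file")
    snaplen = struct.unpack(endian + "I", header[16:20])[0]
    total = truncated = 0
    while True:
        rec = f.read(16)
        if len(rec) < 16:
            break                         # end of file
        _, _, incl_len, orig_len = struct.unpack(endian + "IIII", rec)
        f.seek(incl_len, 1)               # skip the packet bytes themselves
        total += 1
        if incl_len < orig_len:           # packet was cut by the snaplen
            truncated += 1
    return snaplen, total, truncated
```

For example, `with open("/tmp/eth0.pcap", "rb") as f: print(truncation_report(f))` should report no truncated records for a capture taken with `-s 0`.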

Please don’t forget that to successfully capture your network traffic, the “sensor” (the host running tcpdump or any other libpcap tool) must be connected at the right place in your network topology and have enough storage capacity to capture and archive your traffic. In the scope of this blog post, we assume that a simple architecture is in place and that all traffic is correctly logged. OK, now let’s download a test PDF file:

```
# wget http://www.myserver.com/sample.pdf
--2009-04-15 00:18:32--  http://www.myserver.com/sample.pdf
Resolving www.myserver.com... 192.168.1.23
Connecting to www.myserver.com|192.168.1.23|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 82501 (81K) [application/pdf]
Saving to: `sample.pdf'

100%[=======================] 82,501      75K/s   in 1.1s

2009-04-15 00:18:34 (75 KB/s) - `sample.pdf' saved [82501/82501]
```

Now, let’s extract the recorded TCP flows. During the file transfer, tcpdump saved every packet matching the given filter (port 80 and host www.myserver.com) to the file without displaying anything. TCP traffic follows a client/server model: a connection contains the client request and the corresponding server response, one in each direction. The next step is to decode those flows from the captured data. I’ll give two ways to achieve this: one using a GUI and one using the command line.
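To make the notion of a flow concrete, here is a minimal Python sketch of what a flow extractor does first: decode each captured Ethernet/IPv4/TCP frame and file its payload under a (source IP, source port, destination IP, destination port) key, so that the request and the response end up in two separate, one-directional flows. It assumes Ethernet II framing without VLAN tags and IPv4 only; fragmented IP packets are skipped rather than reassembled, and the function names are hypothetical.

```python
import struct
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (source IP, source port, destination IP, destination port): one direction
FlowKey = Tuple[str, int, str, int]


def parse_tcp_frame(frame: bytes) -> Optional[Tuple[FlowKey, int, bytes]]:
    """Decode an Ethernet II / IPv4 / TCP frame into (flow key, seq, payload)."""
    if len(frame) < 34 or struct.unpack("!H", frame[12:14])[0] != 0x0800:
        return None                          # too short, or not IPv4
    ip = frame[14:]
    ihl = (ip[0] & 0x0F) * 4                 # IPv4 header length in bytes
    total_len, frag = struct.unpack("!H2xH", ip[2:8])
    if ip[9] != 6 or frag & 0x3FFF:          # not TCP, or an IP fragment
        return None
    src = ".".join(str(b) for b in ip[12:16])
    dst = ".".join(str(b) for b in ip[16:20])
    tcp = ip[ihl:total_len]                  # ignore Ethernet padding
    if len(tcp) < 20:
        return None
    sport, dport, seq = struct.unpack("!HHI", tcp[:8])
    data_offset = (tcp[12] >> 4) * 4         # TCP header length in bytes
    return (src, sport, dst, dport), seq, tcp[data_offset:]


def group_flows(frames: List[bytes]) -> Dict[FlowKey, List[Tuple[int, bytes]]]:
    """Collect (seq, payload) segments per direction of each TCP connection."""
    flows: Dict[FlowKey, List[Tuple[int, bytes]]] = defaultdict(list)
    for frame in frames:
        parsed = parse_tcp_frame(frame)
        if parsed is not None and parsed[2]:  # skip pure ACKs (no payload)
            key, seq, payload = parsed
            flows[key].append((seq, payload))
    return dict(flows)
```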

The graphical way uses Wireshark, a common protocol analyzer available on several platforms (I assume that the tool is already installed; check the Wireshark documentation for details). Open the generated pcap file and search for our HTTP GET request using the following filter:

```
http.request.method == "GET"
```

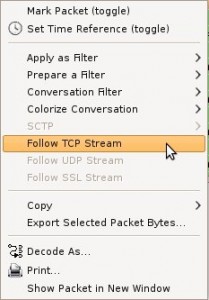

Find the line matching our PDF file, right click and select the option “Follow TCP Stream”:


A new window will be opened with the decoded TCP flow. HTTP request and response are displayed in different colors:


Select only the server response (using the drop-down list) and the “Raw” format, then save the stream to a temporary location.
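The display filter used in Wireshark matches on the HTTP request method. Outside Wireshark, a rough equivalent is to scan a reassembled client-to-server byte stream for request lines. This Python sketch (the `find_get_requests` name is hypothetical) does that with a regular expression and returns the requested paths; it assumes plain HTTP/1.x.

```python
import re
from typing import List

# An HTTP/1.x request line: "GET <request-target> HTTP/<major>.<minor>" + CRLF
GET_LINE = re.compile(rb"^GET (\S+) HTTP/\d\.\d\r\n", re.MULTILINE)


def find_get_requests(client_stream: bytes) -> List[str]:
    """Return the request targets of all GET requests in a client->server stream."""
    return [m.group(1).decode("ascii", "replace")
            for m in GET_LINE.finditer(client_stream)]
```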

The second way, using the command line, relies on a tool called “tcpflow”. It performs the same operation as above, but automatically: all flows are decoded and saved to files:

```
# cd /tmp
# tcpflow -r eth0.pcap
# ls *00080-*
192.168.001.023.00080-192.168.001.124-32458
```
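Because TCP segments may be captured out of order or retransmitted, a tool like tcpflow must order them by sequence number before writing a flow to disk. The following Python sketch shows that reassembly step for one direction of a connection, given hypothetical (sequence number, payload) pairs. It is a simplification, not tcpflow’s actual code: it stops at the first gap (a lost packet) and ignores 32-bit sequence number wraparound.

```python
from typing import Iterable, Tuple


def reassemble(segments: Iterable[Tuple[int, bytes]]) -> bytes:
    """Rebuild one direction of a TCP stream from (seq, payload) pairs."""
    ordered = sorted(segments)              # order by sequence number
    if not ordered:
        return b""
    next_seq = ordered[0][0]                # first byte we expect
    out = bytearray()
    for seq, payload in ordered:
        if seq > next_seq:
            break                           # missing data: stop at the hole
        overlap = next_seq - seq            # bytes of this segment already written
        if overlap < len(payload):          # retransmitted duplicates add nothing
            out += payload[overlap:]
            next_seq = seq + len(payload)
    return bytes(out)
```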

Note that if you perform both manipulations on the same pcap capture, the extracted files should have exactly the same size. If you look at the file (warning: the binary data may corrupt your terminal session!), it contains the HTTP response headers followed by our file (sample.pdf). Let’s strip the headers now.

HTTP headers always end with a double CR-LF pair (in hexadecimal 0x0d, 0x0a, 0x0d, 0x0a). Using a quick and dirty Perl script, we can strip the HTTP headers:

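```
# cat strip_headers.pl
#!/usr/bin/perl
use strict;
use warnings;

# Skip everything up to the first empty line (a lone CR-LF), which ends
# the HTTP response headers, then save the rest of the flow as the file.
binmode(STDIN);
my $in_body = 0;
my $data    = "";
while (my $line = <STDIN>) {
    if ($in_body) {
        $data .= $line;
    } elsif ($line eq "\r\n") {
        $in_body = 1;
    }
}

open(my $fh, '>', 'sample.pdf') or die "Cannot write sample.pdf: $!";
binmode($fh);
print $fh $data;
close($fh);
```

```
# chmod a+x strip_headers.pl
```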
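The same header stripping can be sketched in Python, working on bytes rather than lines. Besides cutting at the first CR-LF CR-LF, this version (an illustration with a hypothetical function name, not a drop-in replacement for the script above) parses the header lines and, when a Content-Length header is present, trims the body to that length, which helps when a keep-alive connection carried several responses in the same flow. Chunked transfer encoding is not decoded.

```python
from typing import Dict, Tuple


def split_http_response(flow: bytes) -> Tuple[str, Dict[str, str], bytes]:
    """Split a raw server-to-client flow into (status line, headers, body)."""
    head, sep, body = flow.partition(b"\r\n\r\n")   # end of the headers
    if not sep:
        raise ValueError("no end-of-headers marker (CR-LF CR-LF) found")
    lines = head.decode("iso-8859-1").split("\r\n")
    headers: Dict[str, str] = {}
    for line in lines[1:]:                           # lines[0] is the status line
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    if "content-length" in headers:
        # Keep only the first response body if several share the flow
        body = body[: int(headers["content-length"])]
    return lines[0], headers, body
```

For example, read the tcpflow output file in binary mode, pass its content to `split_http_response()`, and write the returned body to sample.pdf.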

Finally, let’s execute the script with the generated flow file:

```
# ./strip_headers.pl < 192.168.001.023.00080-192.168.001.124-32458
# file sample.pdf
sample.pdf: PDF document, version 1.4
# ls -l sample.pdf
-rw-r--r-- 1 xavier xavier 82501 2009-04-15 01:15 sample.pdf
```

Check the file size (82501 bytes): it’s exactly the size of the original file downloaded with wget! Test it with your favorite PDF reader. Using this method, you should be able to recover temporary or suspicious files without too many problems. Note that some manual file manipulation might be required (basic Unix knowledge is a “plus”).
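Matching sizes are a good sign, but when the original file is still available (here, the copy saved by wget), comparing cryptographic hashes is a stronger check. A minimal Python sketch, with hypothetical helper names, that compares size and SHA-256 digest and looks for the “%PDF-” signature that PDF files start with:

```python
import hashlib
from pathlib import Path


def sha256_of(path: str) -> str:
    """Hash a file in 64 KiB chunks so large recoveries fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def same_file(recovered: str, original: str) -> bool:
    """True if both files have identical size and SHA-256 digest."""
    if Path(recovered).stat().st_size != Path(original).stat().st_size:
        return False
    return sha256_of(recovered) == sha256_of(original)


def looks_like_pdf(path: str) -> bool:
    """PDF files start with the "%PDF-" signature."""
    with open(path, "rb") as f:
        return f.read(5) == b"%PDF-"
```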

I have tried this and it is not working for me. Could someone please explain to me what this is? ./strip_headers.pl < 192.168.001.023.00080-192.168.001.124-32458

For me it is very difficult to manage large PCAP files for analysis, so I have often used the PCAP2XML tool to convert PCAP into XML or SQLite, and then run queries in an SQLite browser to find the exact values. Please have a look and let me know if other tools are available.

Tool: – http://bit.ly/1DxcncQ

Tool Blog: – http://bit.ly/1DxciWG

The best tool to reconstruct HTTP content from pcap files is justniffer (http://justniffer.sourceforge.net/). It uses portions of the Linux kernel code for IP fragmentation handling and TCP packet reordering. The problem is that there is no port for Windows.

Hello Kroweer,

Have a look at ‘passdown’ (https://github.com/freddyb/passdown). It could help you!

/X

Are you aware of a way to extract RTMP streams from a packet capture?

I use http://xplico.org to do this.

Cool. Can you give me an idea of how to loop the script so that it reads the live packet flow and extracts several PDFs?

I wrote up a similar script that I posted on my handlers’ page in 2006. You can find it at http://handlers.sans.org/jclausing/extract-http.pl I like to use tcptrace to pull the stream in question from a pcap. Amazing how often it comes in handy.